1 Overview

The Dynamic Learning Maps® (DLM®) Alternate Assessment System assesses student achievement in English language arts (ELA), mathematics, and science for students with the most significant cognitive disabilities in grades 3–8 and high school. The purpose of the system is to improve academic experiences and outcomes for students with the most significant cognitive disabilities by setting high and actionable academic expectations and providing appropriate and effective supports to educators. Results from the DLM alternate assessment are intended to support interpretations about what students know and are able to do and to support inferences about student achievement in the given subject. Results provide information that can guide instructional decisions as well as information for use with state accountability programs.

The DLM system is developed and administered by Accessible Teaching, Learning, and Assessment Systems (ATLAS), a research center within the University of Kansas’s Achievement and Assessment Institute. The DLM system is based on the core belief that all students should have access to challenging, grade-level content. Online DLM assessments give students with the most significant cognitive disabilities opportunities to demonstrate what they know in ways that traditional paper-and-pencil assessments cannot.

A complete technical manual was created after the first operational administration of ELA and mathematics in 2014–2015, and a comprehensive refresh of the technical manual was published after the 2021–2022 administration. After each annual administration, a technical manual update is provided to summarize updated information. The current technical manual provides updates for the 2022–2023 administration. Only sections with updated information are included in this manual. For a complete description of the DLM assessment systems, refer to the 2021–2022 Technical Manual—Instructionally Embedded Model (Dynamic Learning Maps Consortium, 2022).

Due to differences in the development timeline for science, a separate technical manual was prepared for the DLM science assessment (see Dynamic Learning Maps Consortium, 2023a).

1.1 Current DLM Collaborators for Development and Implementation

During the 2022–2023 academic year, DLM assessments were available to students in the District of Columbia and 20 states: Alaska, Arkansas, Colorado, Delaware, Illinois, Iowa, Kansas, Maryland, Missouri, New Hampshire, New Jersey, New Mexico, New York, North Dakota, Oklahoma, Pennsylvania, Rhode Island, Utah, West Virginia, and Wisconsin. One partner, Colorado, administered assessments only in ELA and mathematics; another partner, the District of Columbia, administered assessments only in science. The DLM Governance Board is composed of two representatives from the education agency of each member state or district. Representatives have expertise in special education and state assessment administration. The DLM Governance Board advises on the administration, maintenance, and enhancement of the DLM system.

In addition to ATLAS and the DLM Governance Board, other key partners include the Center for Literacy and Disability Studies at the University of North Carolina at Chapel Hill and Assessment and Technology Solutions (previously known as Agile Technology Solutions) at the University of Kansas.

The DLM system is also supported by a technical advisory committee (TAC). The DLM TAC members possess decades of expertise in areas including large-scale assessment, accessibility for alternate assessments, diagnostic classification modeling, and assessment validation. The DLM TAC provides advice and guidance on the technical adequacy of the DLM assessments.

1.2 Assessment Models

Assessment blueprints consist of the Essential Elements (EEs) prioritized for assessment by the DLM Governance Board. To achieve blueprint coverage, each student is administered a series of testlets. The manner of administration varies by assessment model. Each testlet is delivered through an online platform, Kite® Student Portal. Student results are based on evidence of mastery of the linkage levels for every assessed EE.

There are two assessment models for the DLM system. Each state chooses its own model.

Instructionally Embedded model. There are two instructionally embedded testing windows: fall and spring. The assessment windows are structured so that testlets can be administered at appropriate points in instruction. Each window is approximately 15 weeks long. Educators have some choice of which EEs to assess, within constraints defined by the assessment blueprint. For each EE, the system recommends a linkage level for assessment, and the educator may accept the recommendation or choose another linkage level. Recommendations are based on information collected about each student's expressive communication and academic skills. At the end of the year, summative results are based on mastery estimates for the assessed linkage levels for each EE (including performance on all testlets from both the fall and spring windows) and are used for accountability purposes. There are different pools of operational testlets for the fall and spring windows. In 2022–2023, the states adopting the Instructionally Embedded model were Arkansas, Delaware, Iowa, Kansas, Missouri, and North Dakota.

Year-End model. During a single operational testing window in the spring, all students take testlets that cover the whole blueprint. The window is approximately 13 weeks long, and test administrators may administer the required testlets at any point during the window. Students are assigned their first testlet based on information collected about their expressive communication and academic skills. Each testlet assesses one EE at one linkage level. The linkage level for each subsequent testlet varies according to student performance on the previous testlet. Summative assessment results reflect the student's performance and are used for accountability purposes each school year. In states adopting the Year-End model, instructionally embedded assessments are available during the school year but are optional and do not count toward summative results. In 2022–2023, the states adopting the Year-End model were Alaska, Colorado, Illinois, Maryland, New Hampshire, New Jersey, New Mexico, New York, Oklahoma, Pennsylvania, Rhode Island, Utah, West Virginia, and Wisconsin.

Information in this manual is common to both models wherever possible and is specific to the Instructionally Embedded model where appropriate. The Year-End model has a separate technical manual.

1.3 Theory of Action and Interpretive Argument

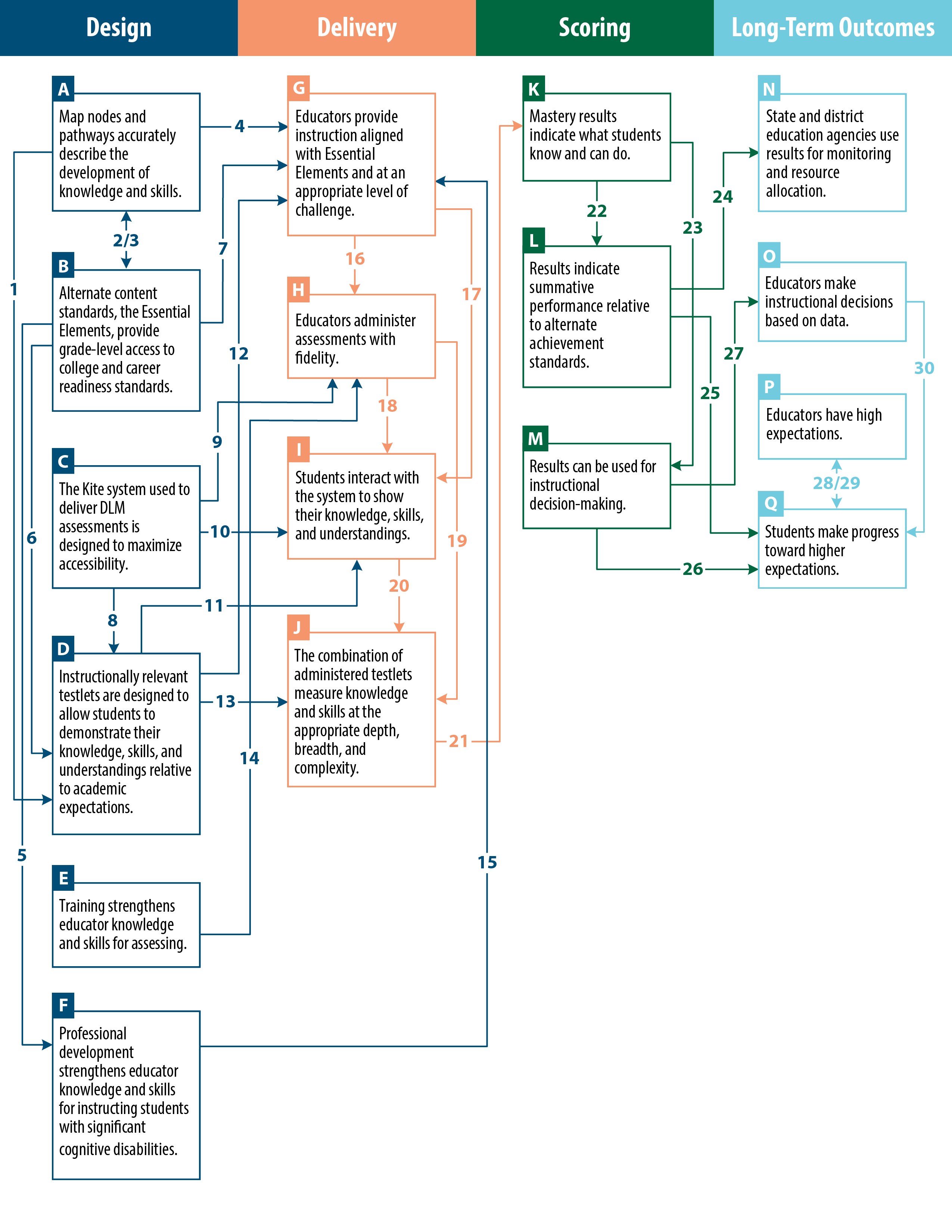

The theory of action that guided the design of the DLM system was formulated in 2011, revised in December 2013, and revised again in 2019. It expresses the belief that high expectations for students with the most significant cognitive disabilities, combined with appropriate educational supports and diagnostic tools for educators, result in improved academic experiences and outcomes for students and educators.

The DLM Theory of Action expresses a commitment to provide students with the most significant cognitive disabilities access to highly flexible cognitive and learning pathways and an assessment system that is capable of validly and reliably evaluating their achievement. Ultimately, students will make progress toward higher expectations, educators will make instructional decisions based on data, educators will hold higher expectations of students, and state and district education agencies will use results for monitoring and resource allocation.

Assessment validation for the DLM assessments uses a three-tiered approach, which specifies (1) the DLM Theory of Action, which defines the statements in the validity argument that must be in place to achieve the goals of the system; (2) an interpretive argument, which defines the propositions that must be evaluated to support each statement in the DLM Theory of Action; and (3) validity studies to evaluate each proposition in the interpretive argument.

After identifying these overall guiding principles and anticipated outcomes, specific elements of the DLM Theory of Action were articulated to inform assessment design and to highlight the associated validity argument. The DLM Theory of Action includes the assessment's intended effects (long-term outcomes); statements related to design, delivery, and scoring; and action mechanisms (i.e., connections between the statements). In Figure 1.1, the chain of reasoning in the DLM Theory of Action is demonstrated broadly by the order of the four sections from left to right. Design statements serve as inputs to delivery, which informs scoring and reporting, which collectively lead to the long-term outcomes for various stakeholders. The chain of reasoning is made explicit by the numbered arrows between the statements.

Figure 1.1: Dynamic Learning Maps Theory of Action

1.4 Technical Manual Overview

This manual provides evidence collected during the 2022–2023 administration of DLM assessments in ELA and mathematics.

Chapter 1 provides a brief overview of the DLM system, including collaborators for development and implementation, the DLM Theory of Action and interpretive argument, and a summary of contents of the remaining chapters. While subsequent chapters describe the individual components of the assessment system separately, key topics such as validity are addressed throughout this manual.

Chapter 2 was not updated for 2022–2023. For a full description of the development of EEs, learning maps, and other assessment structures, see the 2021–2022 Technical Manual—Instructionally Embedded Model (Dynamic Learning Maps Consortium, 2022).

Chapter 3 describes assessment design and development in 2022–2023, including a description of test development activities and external review of content. The chapter then presents evidence of item quality, including results of field testing, operational item data, and differential item functioning.

Chapter 4 describes assessment delivery, including updated procedures and data collected in 2022–2023. The chapter provides information on administration time, device usage, linkage level selection, and accessibility support selections. The chapter also provides evidence from test administrators, including user experience with the DLM system and student opportunity to learn.

Chapter 5 provides a brief summary of the psychometric model used in scoring DLM assessments. This chapter includes a summary of the 2022–2023 calibrated parameters. For a complete description of the modeling method, see the 2021–2022 Technical Manual—Instructionally Embedded Model (Dynamic Learning Maps Consortium, 2022).

Chapter 6 was not updated for 2022–2023; no changes were made to the cut points used for determining performance levels on DLM assessments. See the 2021–2022 Technical Manual—Instructionally Embedded Model (Dynamic Learning Maps Consortium, 2022) for a description of the methods, preparations, procedures, and results of the original standard-setting meeting and the follow-up evaluation of the impact data.

Chapter 7 reports the 2022–2023 operational results, including student participation data. The chapter details the percentage of students achieving at each performance level; subgroup performance by gender, race, ethnicity, and English-learner status; and the percentage of students who showed mastery at each linkage level. Finally, the chapter describes changes to score reports and data files during the 2022–2023 administration.

Chapter 8 summarizes reliability evidence for the 2022–2023 administration, including a brief overview of the methods used to evaluate assessment reliability and results by linkage level, EE, conceptual area, subject (overall performance), and student groups. For a complete description of the reliability background and methods, see the 2021–2022 Technical Manual—Instructionally Embedded Model (Dynamic Learning Maps Consortium, 2022).

Chapter 9 describes updates to the professional development offered across states administering DLM assessments in 2022–2023, including participation rates and evaluation results.

Chapter 10 synthesizes the evidence provided in the previous chapters. It evaluates how the evidence supports the claims in the DLM Theory of Action as well as the long-term outcomes. The chapter ends with a description of our future research and ongoing initiatives for continuous improvement.